Many Microsoft customers are now taking steps to modernise and centralise SaaS app identity by using Enterprise Applications in Azure AD to provide authentication, provisioning and reporting services.

Administrators can do this by adding applications to the Azure AD tenant and assigning users to them, or users (if you let them) can self-service applications (think of the "Log in with Facebook / Google" buttons). Applications that are added are assigned certain permissions, which allow the application to access Azure AD properties via the Microsoft Graph API.

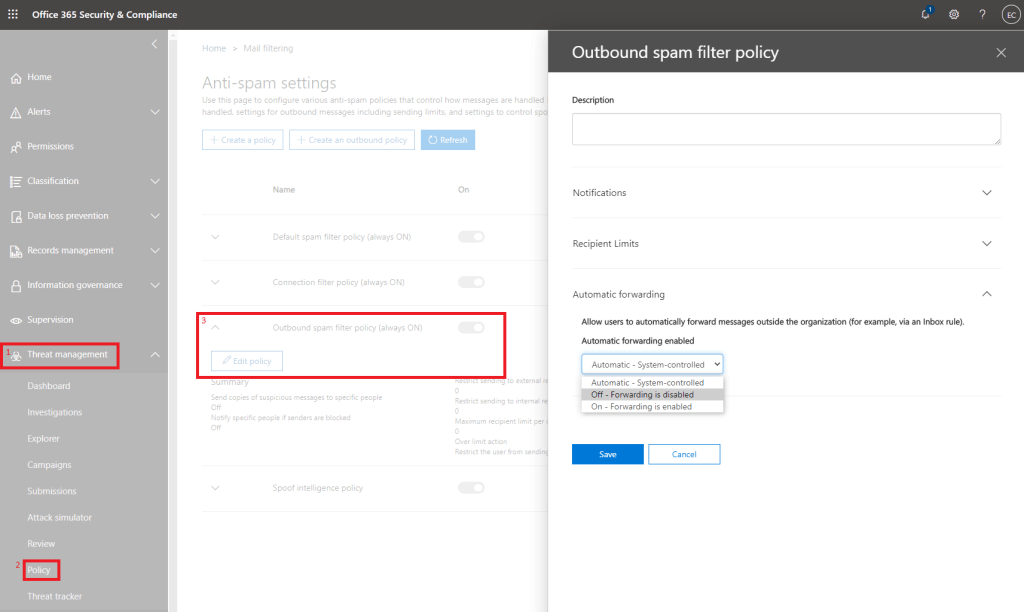

These permissions can be as simple as allowing the application to read the user's display name, all the way to having full access to all files which the user can access in Office 365. You can see these permissions in the GUI by logging on to portal.azure.com and navigating to Azure Active Directory > Enterprise Applications > Application Name > Permissions, as seen in the screenshot. We can see that the Adobe Document Cloud application has been granted admin consent to have full access to all files the user can access, and to sign in and read the user profile. You can see the full range of available permissions in the Microsoft Graph, and what they all mean, here.

This GUI feature is great for looking at individual applications, but if you are allowing users to provide consent themselves, or you are making full use of the Enterprise Applications feature, you are likely to have many applications listed here, and checking them one by one using the GUI is not efficient.

As always, PowerShell comes to the rescue. If we connect to Azure AD by running Connect-AzureAD from the AzureAD v2 PowerShell module, we can export these permissions. Unfortunately, because of the way the data is presented, we need to do a little data massaging to make this possible.

Firstly, we need to get a list of all applications, and this can be done using:

Get-AzureADServicePrincipal -All $true | Select DisplayName,Homepage,ObjectID,AppDisplayName,PublisherName,ServicePrincipalType | Export-Csv c:\reports\azureadsp.csv -NoTypeInformation

This command gets a list of all the Service Principals (read: applications) you have configured. The -All $true switch asks for every result rather than only the first page, and -NoTypeInformation stops Windows PowerShell from writing a #TYPE line above the column headers. It does not list the permissions, however; we need another cmdlet for that. The item we are most interested in for the Service Principal is the ObjectID, as this is the value we can use to map the Service Principal to the permissions.

The next PS command we need is:

Get-AzureADOAuth2PermissionGrant -All $true | Select ClientID,Scope,ConsentType | Export-Csv c:\reports\oauthperms.csv -NoTypeInformation

This command gets a list of all the delegated permissions granted in Azure AD. The important values here are the ClientID, which refers to the application, and the Scope, which lists the permissions granted, as described in the Graph Permissions article.
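The Scope value comes back as a single space-separated string (for example `User.Read Files.ReadWrite.All`), which is awkward to filter on. As a rough sketch, the grants could be expanded to one row per permission. `Expand-GrantScope` is a hypothetical helper name, and the sample data below is invented to stand in for real cmdlet output:

```powershell
# Hypothetical helper (not part of the AzureAD module): emits one object
# per individual permission found in a grant's space-separated Scope string.
function Expand-GrantScope {
    param(
        [Parameter(Mandatory, ValueFromPipeline)]
        [object]$Grant
    )
    process {
        # Split on whitespace; Where-Object drops the empty entries that
        # leading or trailing spaces in Scope would otherwise produce.
        foreach ($permission in ($Grant.Scope -split '\s+' | Where-Object { $_ })) {
            [pscustomobject]@{
                ClientId    = $Grant.ClientId
                ConsentType = $Grant.ConsentType
                Permission  = $permission
            }
        }
    }
}

# Invented sample data standing in for Get-AzureADOAuth2PermissionGrant output.
$sampleGrants = @(
    [pscustomobject]@{ ClientId = 'aaaa-1111'; ConsentType = 'AllPrincipals'; Scope = 'User.Read Files.ReadWrite.All' }
    [pscustomobject]@{ ClientId = 'bbbb-2222'; ConsentType = 'Principal';     Scope = ' User.Read' }
)

$expanded = @($sampleGrants | Expand-GrantScope)
$expanded | Format-Table
```

Against a live tenant, the same helper could presumably be fed directly with `Get-AzureADOAuth2PermissionGrant -All $true | Expand-GrantScope`.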

With this data we have two .csv files, and we need to compare the ObjectID from azureadsp.csv with the ClientID from oauthperms.csv. If we find a match, we need to copy the application's DisplayName across to the matching permissions row. Now I'm no Excel expert, and there are probably better ways of doing this, but this was my method.

I copied the columns from azureadsp.csv into oauthperms.csv. Let's say the ObjectID values from azureadsp.csv ended up in column J. I would then create a new column called Application Name, at column A. I then used an INDEX/MATCH formula to look for identical ObjectID and ClientID values and, if a match was found, populate the Application Name.

The formula used looks like this:

=INDEX($H$2:$H$101,MATCH($B2,$J$2:$J$101,0))

With the column letters replaced by logical names, it looks like this:

=INDEX($DisplayName$2:$DisplayName$101,MATCH($ClientID2,$ObjectID$2:$ObjectID$101,0))

This gives us a value in Application Name showing which application has been given rights to the Microsoft Graph, so we can easily see and filter which permissions have been given to which application. This can be used for management purposes, reporting and security auditing.
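For anyone who would rather skip the spreadsheet step, the same ObjectID-to-ClientID lookup can be sketched directly in PowerShell with a hashtable. This is an alternative to the INDEX/MATCH approach rather than the method used above. `Join-GrantToApplication` is a hypothetical function name, and the sample objects are invented:

```powershell
# Hypothetical helper: adds an ApplicationName to each permission grant by
# matching the grant's ClientId against the service principals' ObjectId.
function Join-GrantToApplication {
    param(
        [object[]]$ServicePrincipals,
        [object[]]$Grants
    )

    # Build a lookup table of ObjectId -> DisplayName (the MATCH step).
    $nameById = @{}
    foreach ($sp in $ServicePrincipals) {
        $nameById[[string]$sp.ObjectId] = $sp.DisplayName
    }

    # Look up each grant's ClientId (the INDEX step). A grant with no
    # matching service principal gets an empty ApplicationName.
    foreach ($grant in $Grants) {
        [pscustomobject]@{
            ApplicationName = $nameById[[string]$grant.ClientId]
            ClientId        = $grant.ClientId
            ConsentType     = $grant.ConsentType
            Scope           = $grant.Scope
        }
    }
}

# Invented sample data; with a live connection these would come from
# Get-AzureADServicePrincipal -All $true and
# Get-AzureADOAuth2PermissionGrant -All $true.
$sampleSps = @(
    [pscustomobject]@{ ObjectId = 'aaaa-1111'; DisplayName = 'Adobe Document Cloud' }
    [pscustomobject]@{ ObjectId = 'bbbb-2222'; DisplayName = 'Example App' }
)
$sampleGrants = @(
    [pscustomobject]@{ ClientId = 'aaaa-1111'; ConsentType = 'AllPrincipals'; Scope = 'User.Read Files.ReadWrite.All' }
    [pscustomobject]@{ ClientId = 'cccc-3333'; ConsentType = 'Principal';     Scope = 'User.Read' }
)

$report = @(Join-GrantToApplication -ServicePrincipals $sampleSps -Grants $sampleGrants)
$report | Format-Table
```

The result could then be piped to `Export-Csv` to produce a single report file. Unlike the Excel formula, which is fixed to rows 2 to 101, the hashtable lookup does not depend on how many applications or grants there are.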

Hopefully this is useful for you, and if you think this could be improved upon please let me know in the comments!